Generative AI for Investment Management & Due Diligence

This is a three part series on Generative AI (Gen AI). The first part covers the opportunities and risk, the second part discusses Gen AI applications for asset allocators and asset managers, and the last part would be Gen AI applications for a topic close to our heart – due diligence where we also introduce Gen AI DDQs.

Please refer to definitions of terms used in this blog series at the end of Part 1

Part 1: Generative AI – An Era of New Opportunities and New Risks

Generative AI is undergoing a hype cycle because of its transformative power which is pervasive applications across many industries and its ability to improve rapidly. It is in the category of general purpose technology, similar to electricity and the internet with widespread societal impact.Just imagine life before and after the internet or electricity! According to Mckinsey, Generative AI’s impact on productivity gain is equivalent to $2.6 to $4.4 trillion annually across the 63 use cases analyzed.

At DiligenceVault, we have been an AI application company with NLP applications around making the diligence response process easy. Generative AI has amplified the impact of AI by extending it from repetitive logical reasoning and analytical tasks to more generative and creative domains, and making the technology architecture easier to implement.

So with this backdrop, what is expected next is a tsunami of Generative AI applications across many industries by technology providers. However, we argue that this tsunami will have a longer arc because there’s significant R&D and risk considerations before seeing a scalable application. Let’s evaluate the reasons why:

- Context Training

For the industry-specific applications to generate high quality results, the models will need contextual training. Let’s look at the example below where Anand on our client success team asked the question: “What is the best Due Diligence Platform?”.

While we love that DV is one of the highly regarded due diligence platforms, ChatGPT is combining a digital diligence network with two virtual data room offerings. With contextual training this may have picked different offerings.

- Source Data & Intellectual Property

Extending the importance of context further, having the right training dataset is crucial. Many firms do not have the data needed to train the foundational models, WSJ captures some of that narrative in their recent article with inputs from venture capitalists who are investing in Generative AI firms.

For firms that have datasets, it’s clearly a competitive advantage. However, data is part of the client’s intellectual property (IP), and firms need to manage the client IP by training a different model for each client on their specific dataset.

- Data Privacy & AI Regulation

One of the widely discussed risk factors is how Generative AI models can put Personal Identifiable Information (“ PII”) at risk, whether it’s exposing companies PII or client’s PII in the process. There are firms which are creating solutions to obfuscate PII without impacting the model’s understanding.

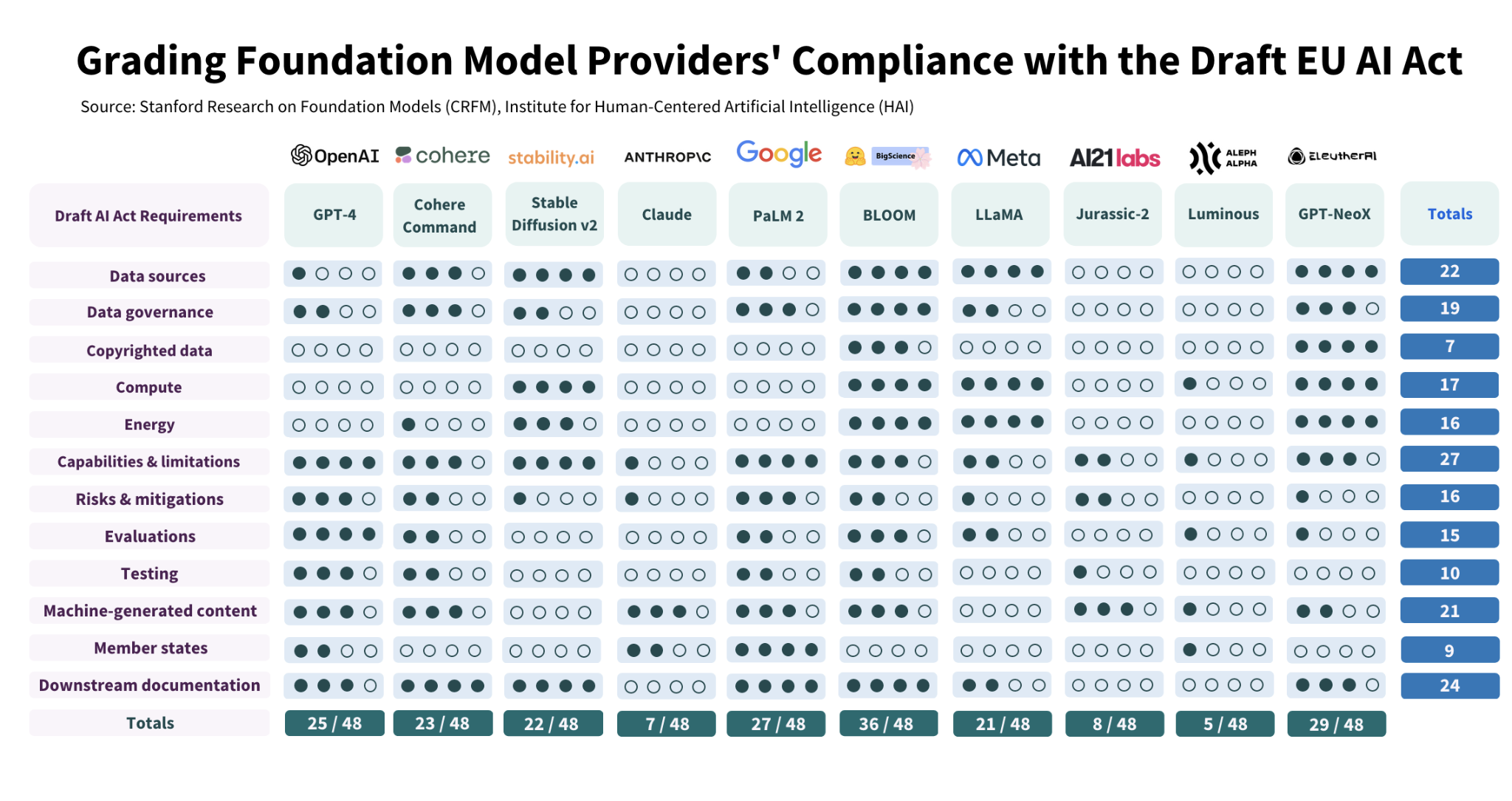

Next, the upcoming European Union (EU)’s AI Act will be the world’s first comprehensive regulation to govern AI. Stanford’s research team analyzed components of four categories of this regulation: Data, Compute, Model and Deployment and provided a compliance score for leading foundational models.

While no foundational model scores consistently high across all factors, Hugging Face leads with the overall score. This will be a space to watch to assess compliance for widespread applications.

- ESG Considerations

Extending Stanford’s research, when evaluating against responsible AI and ESG criteria, none of the models are rated high in these areas:

- Use of copyrighted data for training and consequent liability,

- Energy usage is not reported consistently,

- The evaluation of intentional harms and misuse by bad actors is not addressed by existing models, and all the models score low.

This will be another area to focus for firms who have sustainability and ESG reporting as a priority.

- Cost of AI Compute

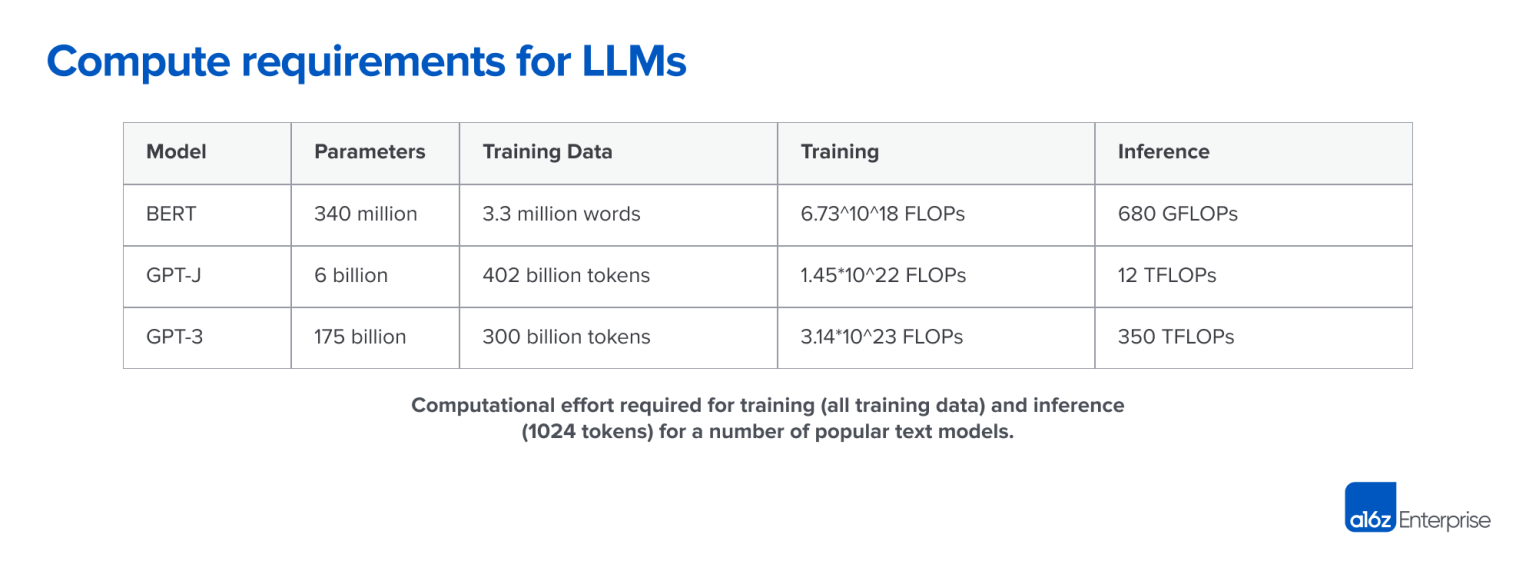

The commercialization arc of Generative AI means that there is a significant investment required early. The compute power to train models and derive inference is significant. Per A16z, companies are spending more than 80% of their total capital raised on compute resources!

The firms deploying LLMs for commercial applications will go down two paths:

a) One who can leverage hosted service models such as OpenAI or Hugging Face. This cohort will have an additional risk consideration of intellectual property infringement.

b) Another cohort will need to train their own foundational model for a specific vertical with guard rails around data privacy and security, however this will be cost intensive. This strategy will create a moat in the short run for firms that are able to deploy capital to invest in building AI architecture.

- Commercial UX

To replicate ChatGPT like UX within an industry specific application, it is important to carefully coordinate speed, quality and cost vectors. Not all applications would be commercially viable – users may not see the cost benefit advantage of some applications, while in other applications users may want instant output vs delays tied to inference.

A very important factor we know about Generative AI is that it’s not always accurate. The best quote I have seen about it is “Generative AI is like a patient intern who lies once in a while”. So there needs to be a review layer to assess the Generative AI output, and a UX that simplifies that process would be crucial.

- Bias

Generative AI can create offensive, inappropriate, or biased content which is a significant reputational risk factor. The source of bias can be from the source data used to train the foundational modal, the modals themselves, as well as assumptions made during development by the creators. So there needs to be additional bias testing and greater focus on explainability.

- New Cybersecurity Threat Vectors

Prompt injection is one of the new risk factors which like SQL injection needs to be researched further.

Further, the power of Generative AI can be misused to create code to sidestep antivirus, firewalls and other security controls, as it makes it easier for the attackers, and makes them more sophisticated.

The Exciting Long Arc of Generative AI

While we have discussed several risks and costs associated with Generative AI, we are bullish that the costs are a fraction of the long term productivity benefits. A successful and scalable solution will not be created in isolation and in a rush. It will require extensive research, scalable AI architecture, data privacy, prompt engineering and cyber risk guard rails, UX considerations, and deep client collaboration through this R&D process.

What’s more, our clients also share our excitement and we are having discussions every few days about most valuable applications, and are readying a beta user group of clients and prospective clients.

Stay tuned for upcoming Part II: Generative AI Applications for Asset Owners & Asset Allocators and Part III: Generative AI Applications for Due Diligence.

References:

Andreessen Horowitz

Mckinsey

Stanford

WSJ

Definitions:

AI – Artificial Intelligence

NLP – Natural Language Processing

Transformer Model – a neural network that learns context, and meaning by tracking relationships in sequential data (example : the words in this sentence)

Foundational Model – A foundational model is a large machine learning model trained on a vast quantity of data at scale (self-supervised learning or semi-supervised learning) such that it can be adapted to a wide range of downstream tasks.

LLM – Large Language Models use deep learning neural networks, with large amounts of parameters, and trained on large amounts of data and can recognize, summarize, translate, predict and generate text and other forms of content. LLM is an application of the transformer model.

Chat GPT – AI Chatbot developed by Open AI based on their foundational models GPT-3.5 and GPT-4

General Purpose Technologies – Technologies that can affect an entire economy, and have the potential to drastically alter societies through their impact on pre-existing economic and social structures

Predictive AI – AI capable of predicting and anticipating the future needs or events based on data analysis. This allows, among other things, to see trends coming, or to predict risks and their solutions.

Generative AI – AI technology that can generate various types of content – text, image, audio, synthetic data.

Training – Model training is the phase in the machine learning lifecycle where practitioners try to fit the best combination of weights and bias to a machine learning algorithm to minimize a loss function over the prediction range

Inference – Model’s ability to quickly generate new content based on a variety of inputs. Inputs and outputs to these models can include text, images, sounds, animation, 3D models, or other types of data.

Prompt Engineering – Description of the task that the AI is supposed to accomplish is embedded in the input, e.g. as a question, instead of it being explicitly given.

Further Technical Insights: https://matthewhartman.substack.com/p/what-gpt-actually-means

Read Part 2 of our Generative AI Content Series – https://diligencevault.com/generative-ai-applications-for-asset-investment-and-wealth-management/